On August 6, 2025, Amnesty International released a report revealing that X’s design and policy choices significantly contributed to the spread of inflammatory and racist narratives targeting Muslims and migrants following the July 29, 2024, Southport attack in the UK.

The analysis, based on X’s open-source code, found that the platform’s content-ranking algorithms prioritise engagement-driven content, amplifying harmful narratives without adequate safeguards to mitigate human rights risks.

The Southport stabbing by Axel Rudakubana, a British citizen born in Cardiff, sparked far-right riots across the UK, fueled by false claims on X that the suspect was a Muslim asylum seeker. Posts speculating on the suspect’s identity as a refugee or foreign national garnered 27 million impressions within 24 hours, per Amnesty’s findings. A post from the “Europe Invasion” account, known for anti-immigrant and Islamophobic content, received over four million views, exacerbating violence against mosques, Islamic buildings, and migrant hotels.

“We will not be intimidated by racists. The riots were targeted, organized and racially motivated attacks.”

Kash is part of the Belfast Islamic Centre, a vibrant place for community, prayer and dialogue.

It was targeted last year in the racist violence that swept Belfast in…

— Amnesty UK (@AmnestyUK) August 6, 2025

Amnesty’s technical analysis highlighted that X’s recommender system lacks mechanisms to assess content’s potential to cause harm until user reports accumulate. Pat de Brun, head of Big Tech Accountability at Amnesty, stated, “X’s algorithmic design and policy choices contributed to heightened risks amid a wave of anti-Muslim and anti-migrant violence.”

A year after the racist violence that followed the murder of three young girls in Southport, @amnesty has found that social media platform X played a key role in spreading false narratives & harmful content, contributing to racist violence targeting Muslims & migrants in the UK.…

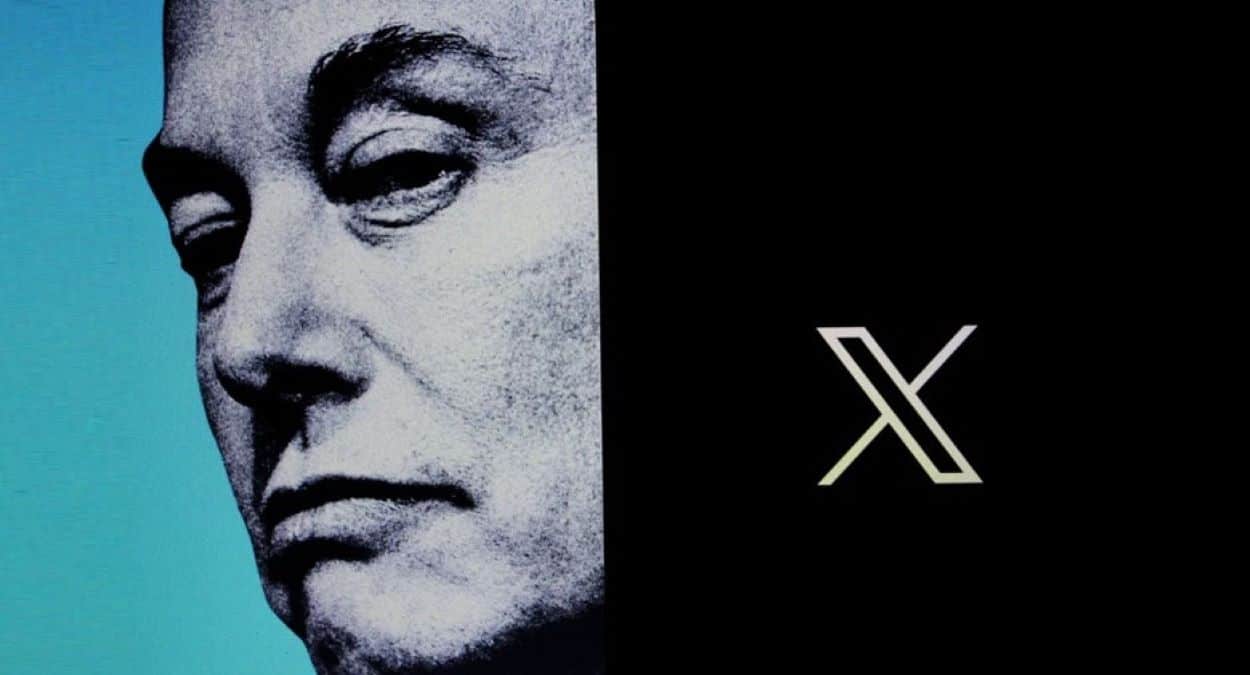

— Amnesty International (@amnesty) August 6, 2025

Since Elon Musk’s 2022 takeover, X has reduced content moderation staff, reinstated banned accounts like that of far-right figure Stephen Yaxley-Lennon (Tommy Robinson), and disbanded the Trust and Safety Advisory Council, weakening oversight.

The Southport tragedy occurred amid significant policy shifts at X, including layoffs of trust and safety engineers. These changes have created a “fertile ground” for harmful narratives, as noted in the report, posing ongoing human rights risks. The reinstatement of accounts previously banned for hate speech has amplified divisive content, undermining efforts to curb misinformation and violence.