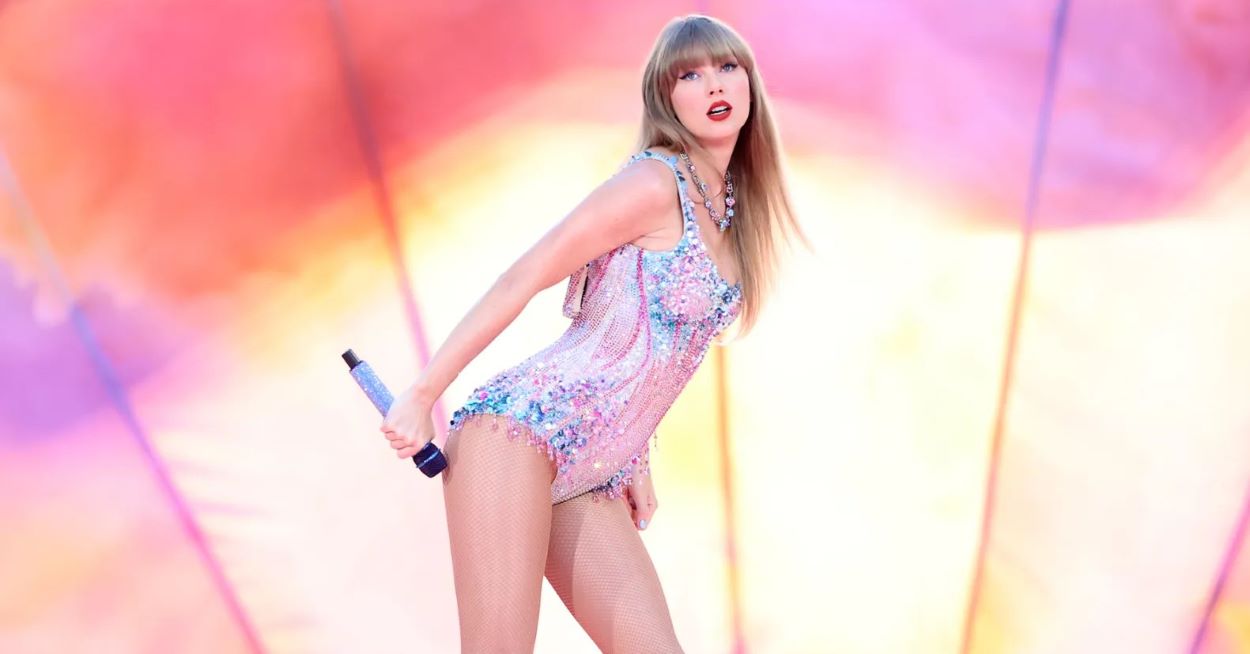

AI-generated pornographic images of Taylor Swift spread on X, formerly Twitter, prompting outrage among Swifties and a search blackout on the platform.

On Saturday, searches for “Taylor Swift” on X returned no results. Instead, they displayed an error message indicating that the problem was not on the user's end.

The AI-generated images, reportedly originating from the website Celeb Jihad, first circulated online and then quickly spread to social media platforms such as X and Instagram, provoking outrage among Swift's fans.

Swifties have expressed deep concern, questioning the legality of the images and urging others to report them so they can be removed immediately. Fans also stressed the need to protect women from this kind of exploitation.

Although there is no federal law against deepfake pornography, several states have legislation in place. California and Illinois, for example, allow victims to sue for defamation.

Despite criticism from NGOs and women's rights activists, the site that produced the images remains operational. The social media platforms where the images appeared have yet to address the issue publicly.

Swift and her team have not commented on this intrusion. Whether the government was involved in blocking the search remains unconfirmed, despite rumours that the White House was concerned. The controversy highlights the urgent need for legislation against such AI-generated content.