OpenAI has dissolved a key safety team focused on future AI systems following the exit of its leaders, including co-founder Ilya Sutskever.

The group, known as the superalignment team, will no longer operate as a separate unit. Instead, it will be folded into OpenAI’s broader research efforts on safety, the company told Bloomberg News. OpenAI says the move is meant to spread safety-focused work more widely across the company.

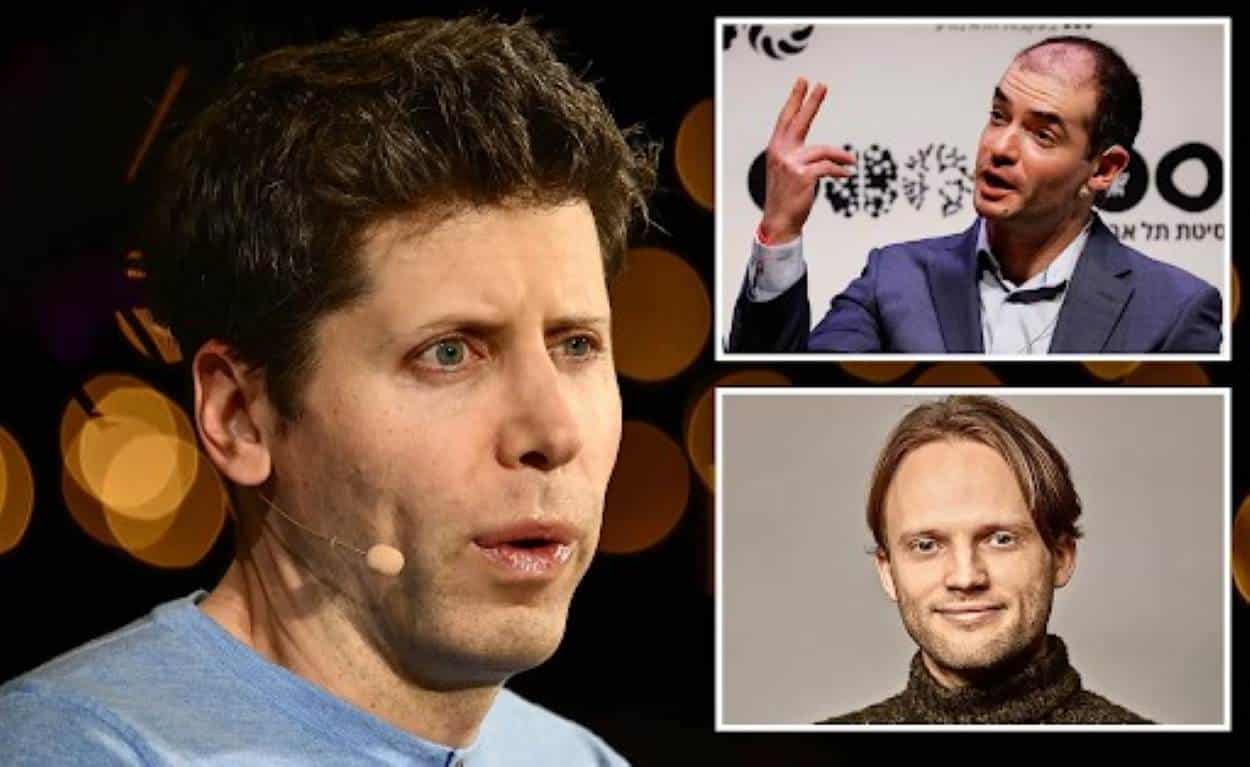

The dissolution comes amid a series of high-profile departures, including Sutskever’s, which have reignited debate over whether the company is prioritizing the pace of development over its safety protocols. Sutskever, who had disagreed with CEO Sam Altman about how quickly AI should be developed, announced his departure on Tuesday.

Shortly afterward, Jan Leike, the team’s other co-leader, resigned, saying his team had been struggling for resources and that it was becoming increasingly difficult to carry out crucial research. Responding to Leike’s concerns, Altman acknowledged that the company had more work to do and reaffirmed its commitment to improving.

Other team members, including Leopold Aschenbrenner and Pavel Izmailov, have also left. OpenAI has since named Jakub Pachocki to succeed Sutskever as chief scientist and put John Schulman in charge of the company’s ongoing alignment research.

Despite these changes, OpenAI says it remains committed to developing artificial general intelligence (AGI) safely. Altman expressed confidence that Pachocki will lead the company toward AGI that benefits everyone. The company also points to its other safety-related teams and initiatives as evidence of its continued focus on mitigating AI risks.