Harvard students have developed an application called I-Xray for Ray-Ban Meta smart glasses. The app identifies individuals through the glasses’ camera and exposes sensitive personal information about them, retrieving addresses and phone numbers without the subjects’ awareness.

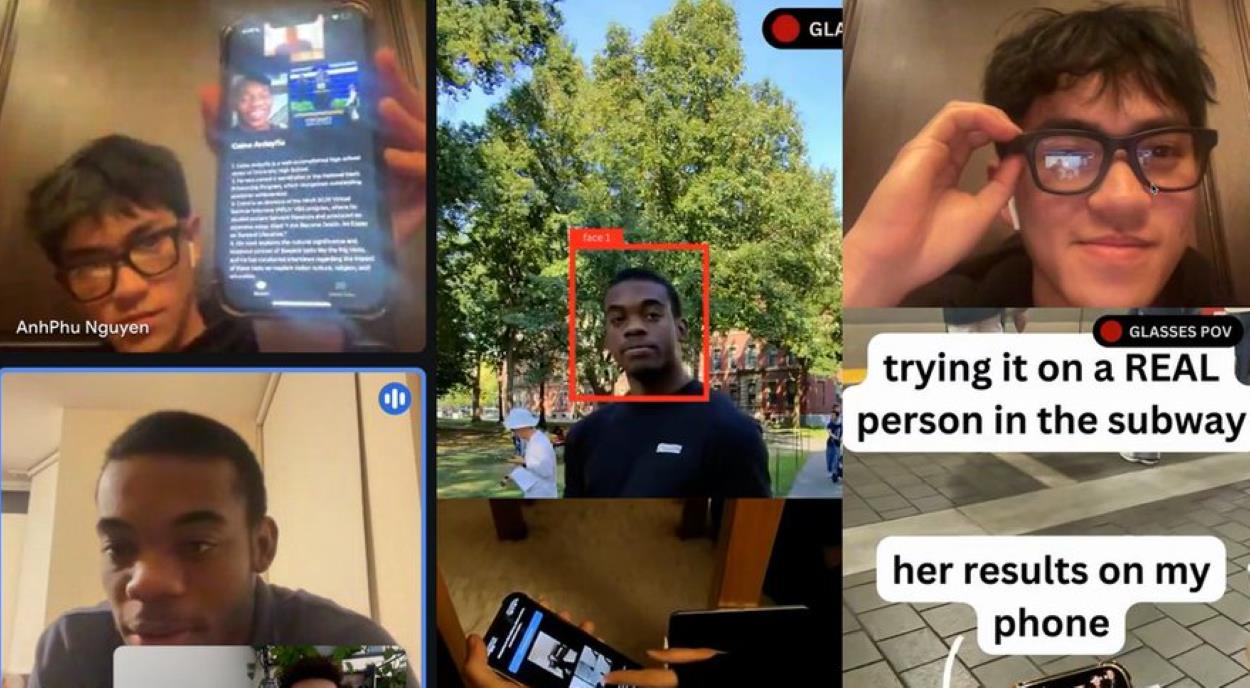

Two engineering students from Harvard demonstrated I-Xray, which uses artificial intelligence (AI) to recognize faces, in a video posted on X (formerly known as Twitter). They stressed that they would not release the app publicly and that their aim was to highlight the privacy risks of AI-powered wearable devices with discreet cameras.

I-Xray processes visual data from the camera to uncover personal information about the people it recognizes. Exposing someone’s private details in this way is known as doxing, a term derived from “dropping dox,” which means publishing private documents about someone without their consent.

The developers explained that although they demonstrated I-Xray with Ray-Ban Meta smart glasses, the application could work with any smart glasses equipped with discreet cameras. The app uses reverse face search tools such as PimEyes and FaceCheck to match a person’s face to images of them online, then pulls data from the URLs where those images appear.

A large language model (LLM) then analyzes these URLs to extract detailed personal information such as names, occupations, and addresses. The model also combs through publicly available government records, including voter registrations, and uses an online tool called FastPeopleSearch.

In their video demonstration, the app’s developers, AnhPhu Nguyen and Caine Ardayfio, showed how I-Xray could retrieve personal data about people they met on the street simply by activating the camera and engaging them in conversation.

A document shared by the developers states that combining LLMs with reverse face search enables automatic, comprehensive data extraction that traditional methods could not achieve.

While the students have chosen not to make the app publicly available, they developed it to underscore the privacy risks posed by AI-enabled devices that can record people covertly. They also noted that despite their intentions to prevent misuse, others could still develop and misuse similar technologies.